AI Image Generation - The Fun Stuff and a Few Ethical Questions.

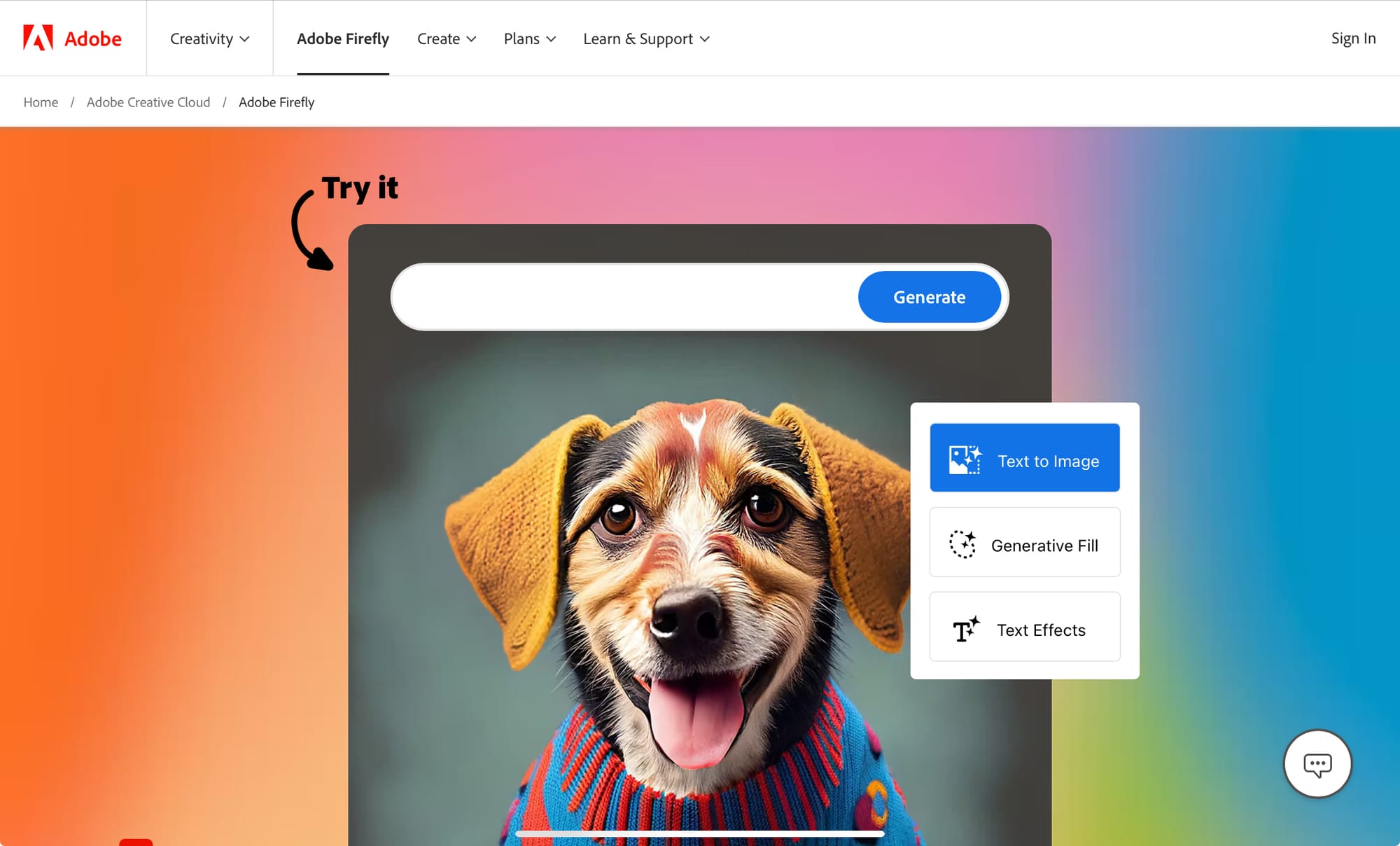

I’ve recently started taking a closer look at AI-generated imagery, firstly out of a sense of fun, but also out of curiosity about the ethical issues. Spoiler alert ... I didn't create any of the images 'from scratch' for this post. I faked it all with Adobe Firefly 2.

For those who know me, while I’ve got a deep appreciation for all things creative, I’m certainly more of a technical person. I am familiar with the mechanics of using tools such as Photoshop from my earlier years as a digital media developer, where I would ‘cut up’ images created in Photoshop and bring them to life in all manner of things such as interactive kiosks, demos, CD-ROMs and the like.

While I could compose a specific user interface view by configuring the visibility of appropriately labelled layers, I never really ‘created’ anything original of my own. More recently I’ve been using Lightroom to enhance images due to my growing interest in photography. But 'creating' images myself is something else entirely.

One evening I was curious as to what ‘Firefly’ was all about after seeing it pop up on my Adobe Cloud homepage. The tool comes as part of a paid-for subscription. I was amazed that I could just type in something and the software would turn out an image based on my prompt text. The results were pretty impressive … I could specify the ‘what’ through a text prompt and Firefly would take care of the ‘how’.

For somebody like me, on the surface it looked like a game changer … the ability to create new images for this site quickly and easily, with very limited artistic skills in the conventional sense. All manner of tweaks and options in Firefly can help to create everything from extremely detailed photos to futuristic cyberpunk-style art. All you need is imagination and the ability to express it via a prompt.

While it was indeed fun, there were a few drawbacks - some more obvious than others. First of all, due to the sheer number of configuration options in Firefly and its ability to interpret very detailed prompts, the results were unpredictable. I suspect that the lack of predictability is actually part of the attraction though.

If you look really closely at the image at the top of this article, the computer equipment looks like it was stuck in an oven beforehand (it's a wonder the bear didn't complain about burns). Things look a bit more complicated with the image below:

It looks like there is a human hand trying to escape from the bear. Mildly creepy. Obviously the AI-generated image had used some other real image as a basis to create something 'unique' for me. That image was obviously scraped from somewhere else - such as a stock photo library or someone's personal site, where they could have created the original image themselves.

Did Adobe get permission from the publisher of the original content? I can't answer that, but it reflects a bigger argument around generative AI platforms, where large amounts of original material were scraped from sites without the knowledge or permission of the site owners.

Some may argue that if you publish something on the Internet, then it is fair game for other people to copy it. But what if it is copied and used to train machine learning models on an industrial scale by a service that ultimately seeks to make money? Will the original creators get paid for their efforts? I doubt it. There are quite a few lawsuits under way over this very issue.

I doubt any of my concerns will stop generative AI tools from becoming more popular - either for fun meme generation or commercial purposes. Permission as well as payment and attribution to original creators shouldn't be forgotten about though.

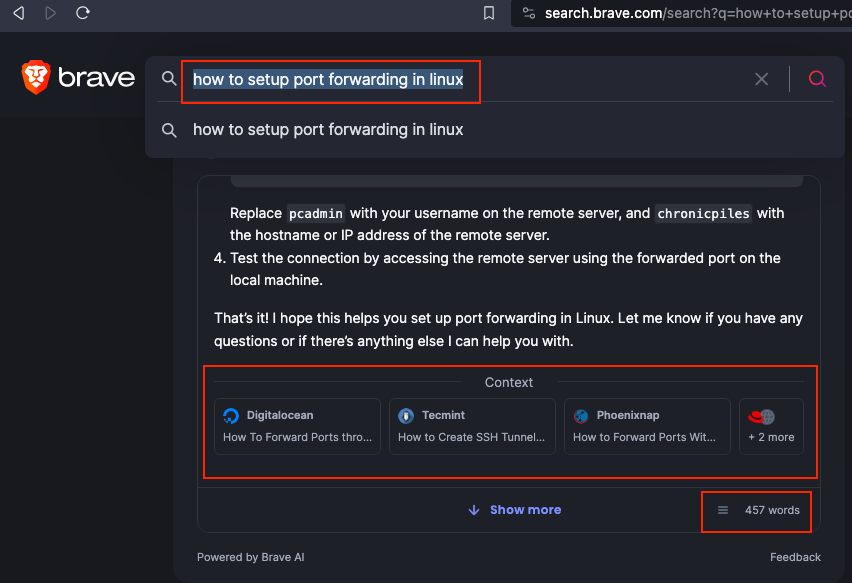

Although it is specific to 'conventional' text-based generative AI tools, I do believe that the approach taken by Brave to integrate AI with search capabilities is a great step in the right direction. From the image below, you can see that I searched for how to set up port forwarding in Linux.

There was an impressive amount of step-by-step instructions returned - 457 words. At first glance, it looked pretty comprehensive (I didn't reproduce the steps on how to set up port forwarding), and it was a nice touch on the part of Brave to quote their sources at the very least.

There is also something more important than attribution at stake here - transparency. It helps the user to determine if the content is trustworthy and accurate. Those two words, I fear, could get lost in the run-up to elections, which is certain to see generative AI being used (abused?) as a tool (weapon?) to aggressively pursue votes.